This, of course, raised alarms for security researchers, as it could be a slippery slope that opens the door to surveillance of millions of people’s personal devices. It is quite the turnaround from Apple’s previous stance on giving law enforcement access, especially given that it famously butted heads with the FBI in 2016, when the agency requested access to a terror suspect’s iPhone. Company CEO Tim Cook said at the time that the FBI’s request was “chilling” and would undeniably create a backdoor for more government surveillance.

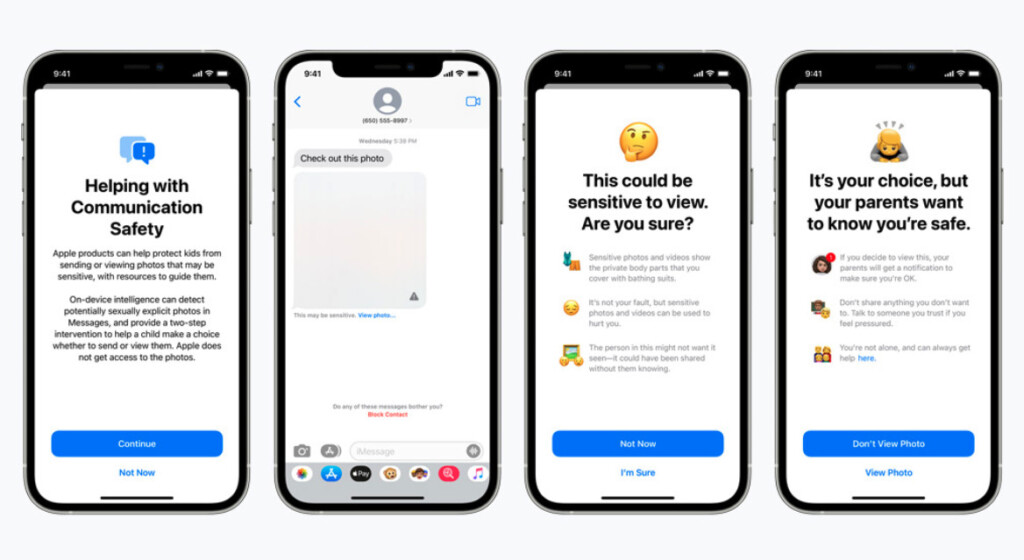

In a blog post, Apple confirmed the feature as part of a suite of child safety measures that it will be adding in upcoming updates to iOS 15, iPadOS 15, watchOS 8, and macOS Monterey. Its main focus seems to be curbing the spread of Child Sexual Abuse Material (CSAM) online, at least on its own devices. The three main changes affect Messages, Siri and Search, and iCloud. For the time being, the update will only roll out to Apple devices in the US.

In Messages, the new tools will warn children and their parents when sexually explicit photos are received or sent. The content will be blurred and users will have to confirm that they really want to view it. The app will use on-device machine learning to analyse image attachments and determine whether a photo is sexually explicit, with Apple assuring users that it will not have access to the messages.

CSAM detection will also be used on iOS and iPadOS to scan images stored in iCloud Photos, and Apple will report any child abuse imagery to the National Center for Missing and Exploited Children (NCMEC), which is collaborating with Apple on this initiative. “Before an image is stored in iCloud Photos, an on-device matching process is performed for that image against the known CSAM hashes,” wrote Apple in its blog post. “This matching process is powered by a cryptographic technology called private set intersection, which determines if there is a match without revealing the result.”
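To give a rough sense of what matching an image against a list of known hashes means, here is a minimal Python sketch. It is a heavy simplification and not Apple's method: it uses an ordinary SHA-256 digest and a plain set lookup, so unlike the private set intersection Apple describes, it reveals the result to whoever runs it. The function names and placeholder byte strings are hypothetical.

```python
import hashlib


def digest(image_bytes: bytes) -> str:
    """Return the SHA-256 hex digest of the raw image bytes."""
    return hashlib.sha256(image_bytes).hexdigest()


def matches_known_hash(image_bytes: bytes, known_hashes: set) -> bool:
    """Check whether the image's digest appears in the set of known digests.

    Assumption: a plain set lookup stands in for the real protocol,
    which is designed to hide the match result from the device.
    """
    return digest(image_bytes) in known_hashes


# Hypothetical placeholder data standing in for a database of known hashes.
known_hashes = {digest(b"placeholder-flagged-image")}

print(matches_known_hash(b"placeholder-flagged-image", known_hashes))  # True
print(matches_known_hash(b"holiday-photo", known_hashes))              # False
```

An exact digest like this changes completely if even one byte of the file changes, so it only illustrates the lookup step, not how a production system would recognise resized or re-encoded copies.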

Another technology called “threshold secret sharing” ensures that the company can’t see the contents unless an individual crosses an unspecified threshold of CSAM content. Should the threshold be crossed, a report will be generated, which Apple will manually review before disabling the user’s account and sending the report to the NCMEC.

Lastly, Siri and Search will be expanded to provide guidance and point users to the right resources if they wish to report potential CSAM. In addition, both features will intervene when users search for material related to CSAM. “These interventions will explain to users that interest in this topic is harmful and problematic, and provide resources from partners to get help with this issue,” Apple noted.

(Sources: Apple, FT, Engadget // Image: AP/Luca Bruno)